Ubisoft unveils Scalar: A cloud technology built to power the vast game worlds of tomorrow

In anticipation of this year’s Game Developers Conference (GDC), Ubisoft has revealed a groundbreaking cloud-based technology named ‘Scalar’ designed to provide “unprecedented freedom and scale” for future game titles. Developed by Ubisoft Stockholm, Scalar aims to remove technical constraints for game developers by giving them access to the virtually limitless computing power of the cloud.

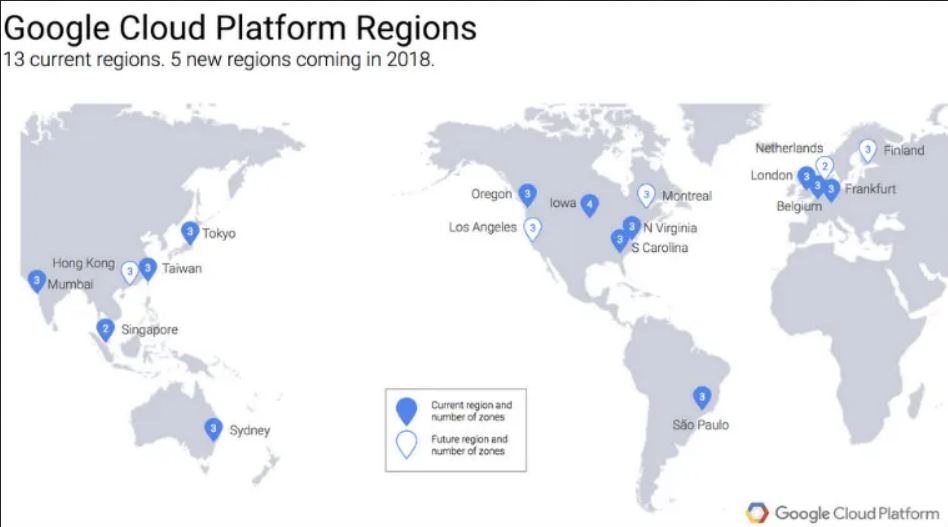

Addressing limitations on both the hardware and collaboration fronts, Ubisoft Scalar acts as a cloud-driven production tool or engine. It frees game developers from the constraints of single-machine computing by turning assets, animations, audio, and rendering into distributed services. Unlike cloud streaming services such as Xbox Cloud Gaming or Stadia, Scalar lets developers keep building the game world while it is running. Games built on the platform will be “always running,” allowing live updates without requiring players to exit the game to install patches.

Christian Holmqvist, Technical Director at Ubisoft Scalar, highlighted the potential for developers to create dynamic, evolving worlds that foster a closer connection between players and game creators. Although the studio did not specify how Scalar handles glitches or crashes, it suggested that every player would encounter such incidents at the same time, as part of a single shared experience.

Scalar also separates the interlocking functions of a traditional game engine, preventing the “butterfly effect” in which a change in one area has unintended consequences for other parts of the project. According to Holmqvist, this separation significantly speeds up iteration and testing, and makes it easy to share services or modifications.

Scalar is not positioned as a replacement for existing game engines but as an alternative that speeds up production and enhances real-time experiences. Ubisoft Stockholm is actively developing a new IP alongside Scalar, drawing on a decentralized workforce spanning Kyiv, Malmö, Helsinki, and Bucharest. The studio is currently expanding its team and has open positions available. Further details about the new IP have yet to be disclosed.